Causality

\( \newcommand{\states}{\mathcal{S}} \newcommand{\actions}{\mathcal{A}} \newcommand{\observations}{\mathcal{O}} \newcommand{\rewards}{\mathcal{R}} \newcommand{\traces}{\mathbf{e}} \newcommand{\transition}{P} \newcommand{\reals}{\mathbb{R}} \newcommand{\naturals}{\mathbb{N}} \newcommand{\complexs}{\mathbb{C}} \newcommand{\field}{\mathbb{F}} \newcommand{\numfield}{\mathbb{F}} \newcommand{\expected}{\mathbb{E}} \newcommand{\var}{\mathbb{V}} \newcommand{\by}{\times} \newcommand{\partialderiv}[2]{\frac{\partial #1}{\partial #2}} \newcommand{\defineq}{\stackrel{{\tiny\mbox{def}}}{=}} \newcommand{\defeq}{\stackrel{{\tiny\mbox{def}}}{=}} \newcommand{\eye}{\Imat} \newcommand{\hadamard}{\odot} \newcommand{\trans}{\top} \newcommand{\inv}{{-1}} \newcommand{\argmax}{\operatorname{argmax}} \newcommand{\Prob}{\mathbb{P}} \newcommand{\avec}{\mathbf{a}} \newcommand{\bvec}{\mathbf{b}} \newcommand{\cvec}{\mathbf{c}} \newcommand{\dvec}{\mathbf{d}} \newcommand{\evec}{\mathbf{e}} \newcommand{\fvec}{\mathbf{f}} \newcommand{\gvec}{\mathbf{g}} \newcommand{\hvec}{\mathbf{h}} \newcommand{\ivec}{\mathbf{i}} \newcommand{\jvec}{\mathbf{j}} \newcommand{\kvec}{\mathbf{k}} \newcommand{\lvec}{\mathbf{l}} \newcommand{\mvec}{\mathbf{m}} \newcommand{\nvec}{\mathbf{n}} \newcommand{\ovec}{\mathbf{o}} \newcommand{\pvec}{\mathbf{p}} \newcommand{\qvec}{\mathbf{q}} \newcommand{\rvec}{\mathbf{r}} \newcommand{\svec}{\mathbf{s}} \newcommand{\tvec}{\mathbf{t}} \newcommand{\uvec}{\mathbf{u}} \newcommand{\vvec}{\mathbf{v}} \newcommand{\wvec}{\mathbf{w}} \newcommand{\xvec}{\mathbf{x}} \newcommand{\yvec}{\mathbf{y}} \newcommand{\zvec}{\mathbf{z}} \newcommand{\Amat}{\mathbf{A}} \newcommand{\Bmat}{\mathbf{B}} \newcommand{\Cmat}{\mathbf{C}} \newcommand{\Dmat}{\mathbf{D}} \newcommand{\Emat}{\mathbf{E}} \newcommand{\Fmat}{\mathbf{F}} \newcommand{\Gmat}{\mathbf{G}} \newcommand{\Hmat}{\mathbf{H}} \newcommand{\Imat}{\mathbf{I}} \newcommand{\Jmat}{\mathbf{J}} \newcommand{\Kmat}{\mathbf{K}} \newcommand{\Lmat}{\mathbf{L}} 
\newcommand{\Mmat}{\mathbf{M}} \newcommand{\Nmat}{\mathbf{N}} \newcommand{\Omat}{\mathbf{O}} \newcommand{\Pmat}{\mathbf{P}} \newcommand{\Qmat}{\mathbf{Q}} \newcommand{\Rmat}{\mathbf{R}} \newcommand{\Smat}{\mathbf{S}} \newcommand{\Tmat}{\mathbf{T}} \newcommand{\Umat}{\mathbf{U}} \newcommand{\Vmat}{\mathbf{V}} \newcommand{\Wmat}{\mathbf{W}} \newcommand{\Xmat}{\mathbf{X}} \newcommand{\Ymat}{\mathbf{Y}} \newcommand{\Zmat}{\mathbf{Z}} \newcommand{\Sigmamat}{\boldsymbol{\Sigma}} \newcommand{\identity}{\Imat} \newcommand{\epsilonvec}{\boldsymbol{\epsilon}} \newcommand{\thetavec}{\boldsymbol{\theta}} \newcommand{\phivec}{\boldsymbol{\phi}} \newcommand{\muvec}{\boldsymbol{\mu}} \newcommand{\sigmavec}{\boldsymbol{\sigma}} \newcommand{\jacobian}{\mathbf{J}} \newcommand{\ind}{\perp\!\!\!\!\perp} \newcommand{\bigoh}{\text{O}} \)

- tags

- Statistics, Machine Learning, AI

This note covers the notions of causality used in Statistics and AI. It also serves as a place to link to various resources and to keep notes on them.

Common Cause principle

It is well known that statistical properties alone do not determine causal structures.

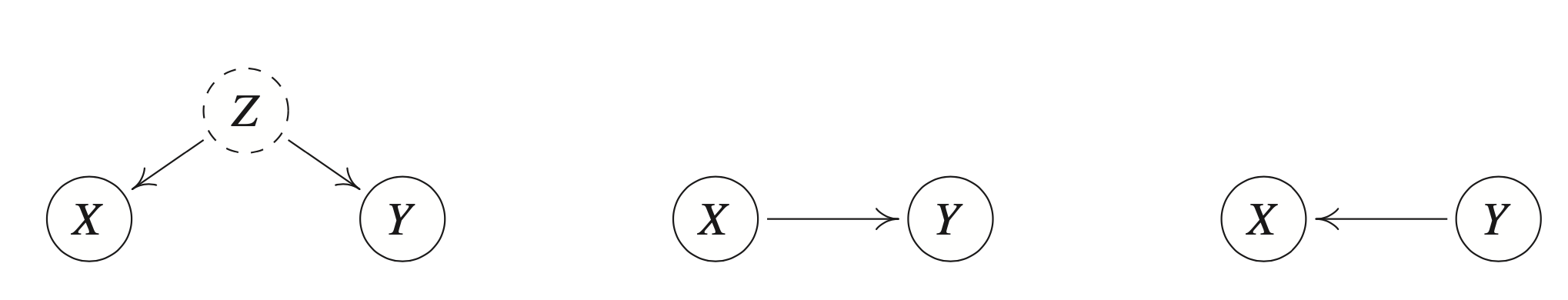

Figure 1: Reichenbach’s common cause principle establishes a link between statistical properties and causal structures. A statistical dependence between two observables \(X\) and \(Y\) indicates that they are caused by a (potentially new) variable \(Z\). In the figure, causation is denoted by arrows.

Reichenbach’s common cause principle:

If two random variables X and Y are statistically dependent, then there exists a third variable Z that causally influences both. (As a special case, Z may coincide with either X or Y.) Furthermore, this variable Z screens X and Y from each other in the sense that given Z, they become independent.
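The screening-off part of the principle can be checked numerically. The sketch below assumes a hypothetical linear-Gaussian common cause (the coefficients `2.0` and `-1.5` and the helper `residual` are made up for illustration). In that setting a zero partial correlation given \(Z\) is the same as conditional independence, which is not true in general.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000

# Hypothetical linear-Gaussian common cause: Z -> X and Z -> Y.
z = rng.normal(size=n)
x = 2.0 * z + rng.normal(size=n)
y = -1.5 * z + rng.normal(size=n)

def residual(v, given):
    """Remove the least-squares linear effect of `given` from `v`."""
    slope = np.cov(v, given)[0, 1] / np.var(given, ddof=1)
    return v - slope * given

# Marginal correlation: X and Y are clearly dependent.
marginal_corr = np.corrcoef(x, y)[0, 1]

# Partial correlation given Z: close to zero, because Z screens X off from Y.
partial_corr = np.corrcoef(residual(x, z), residual(y, z))[0, 1]

print(f"corr(X, Y)     = {marginal_corr:.3f}")
print(f"corr(X, Y | Z) = {partial_corr:.3f}")
```

Neither \(X\) nor \(Y\) causes the other here, yet they are strongly correlated until \(Z\) is accounted for.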

While this principle lays out a primal causal model, estimating such a model from data (especially if the data is not causally sufficient) is extremely difficult, and in many cases the model is not identifiable. This should give us pause about the motivation for using causality in machine learning. Still, making assumptions about the data-generating process, without hard assumptions on the causal model the agent uses internally, might produce powerful techniques or insights for designing algorithms. This direction might also provide groundwork for understanding when problems in perception are hopeless (Scholkopf et al. 2012). With this in mind we will cautiously move forward.

Terms and special cases

Disentangled model

https://arxiv.org/abs/2102.11107

Independent Causal Mechanisms Principle

Markov Factorizations

Domain adaptation

Classes of Causal models

Causal hierarchy

Some assumptions for tractability

These are defined under a simple functional causal model where \(C\) is a cause variable (with independent noise \(N_C\)), the function \(\psi\) is a deterministic mechanism, and \(E = \psi(C, N_E)\), where \(N_E\) is independent noise.

Causal Sufficiency

Assume two independent noise variables \(N_C\) and \(N_E\) with distributions \(P(N_C)\) and \(P(N_E)\). The function \(\psi\) and the noise \(N_E\) jointly determine \(P(E|C)\) via \(E=\psi(C, N_E)\). This conditional is thought of as the mechanism transforming cause \(C\) into effect \(E\).

Independence of mechanism and input

The mechanism \(\psi\) is independent of the distribution of the cause.
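A minimal simulation of what this assumption buys, under made-up choices: the mechanism `psi`, the two cause distributions, and the helper names are all hypothetical. The same mechanism is driven by two different \(P(C)\). The marginal of \(E\) changes, but the conditional behaviour of \(E\) near a fixed value of \(C\) does not.

```python
import numpy as np

def psi(c, n_e):
    """Hypothetical mechanism; any deterministic function of (C, N_E) would do."""
    return np.tanh(c) + 0.5 * c * n_e

def sample(cause_sampler, n, rng):
    """Draw (C, E) pairs with E = psi(C, N_E) and N_E independent of C."""
    c = cause_sampler(n, rng)
    n_e = rng.normal(size=n)
    return c, psi(c, n_e)

def mean_effect_near(c, e, c0, width=0.1):
    """Crude estimate of E[E | C = c0] from samples with C close to c0."""
    mask = np.abs(c - c0) < width
    return e[mask].mean()

rng = np.random.default_rng(1)
n = 200_000

# Two different input distributions P(C), same mechanism psi.
c_a, e_a = sample(lambda size, r: r.normal(0.0, 1.0, size=size), n, rng)
c_b, e_b = sample(lambda size, r: r.uniform(0.0, 2.0, size=size), n, rng)

m_a = mean_effect_near(c_a, e_a, 0.5)
m_b = mean_effect_near(c_b, e_b, 0.5)

print("E[E | C ~ 0.5]:", m_a, m_b)            # approximately equal
print("E[E]          :", e_a.mean(), e_b.mean())  # clearly different
```

This is only the forward (simulation) view. It says nothing about whether the assumption holds for a learning system that influences its own inputs.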

Richness of functional causal models

Without further restrictions, two-variable functional causal models are so “rich” that the causal direction cannot be inferred from the joint distribution: a model can be fit in either direction.
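One standard way to see this, sketched under the assumption that the conditional distribution functions are continuous and strictly increasing (the symbols \(F\), \(\tilde{\psi}\), and \(\tilde{N}\) are introduced here for illustration): take \(N_E\) uniform on \([0, 1]\) and independent of \(C\), and let the mechanism be the inverse conditional CDF.

\[ \psi(c, n) = F_{E \mid C = c}^{-1}(n), \qquad E = \psi(C, N_E) \;\Rightarrow\; P(E \le e \mid C = c) = F_{E \mid C = c}(e) \]

Swapping the roles gives \(C = \tilde{\psi}(E, \tilde{N})\) with \(\tilde{\psi}(e, n) = F_{C \mid E = e}^{-1}(n)\) and \(\tilde{N}\) uniform and independent of \(E\), so the same joint distribution admits a functional model in both directions.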

Additive noise models

The additive noise model assumes for some function \(\phi\)

\[ E = \phi( C) + N_E \]

The importance of this is that in the generic case, two real-valued random variables X and Y can be fit by an ANM in at most one direction (which is then taken to be the causal direction). The main exception is the linear-Gaussian case (\(\phi\) linear with both \(C\) and \(N_E\) Gaussian), where an ANM fits in both directions.
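The linear-Gaussian case deserves care, because there an ANM fits in both directions. As a worked check (a sketch; \(a\), \(b\), \(\sigma_C^2\), \(\sigma_E^2\), and \(\tilde{N}\) are notation introduced here), suppose \(C \sim \mathcal{N}(0, \sigma_C^2)\) and \(E = aC + N_E\) with \(N_E \sim \mathcal{N}(0, \sigma_E^2)\) independent of \(C\). Regressing backwards gives

\[ b = \frac{a \sigma_C^2}{a^2 \sigma_C^2 + \sigma_E^2}, \qquad \tilde{N} = C - bE, \qquad \operatorname{Cov}(\tilde{N}, E) = a\sigma_C^2 - b\,(a^2\sigma_C^2 + \sigma_E^2) = 0 \]

Because \(\tilde{N}\) and \(E\) are jointly Gaussian, zero covariance implies independence, so \(C = bE + \tilde{N}\) is also a valid additive noise model. Linear-Gaussian data therefore does not reveal the causal direction.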

The Causal Markov condition

Let \(G\) be a causal graph with vertices \(\mathbf{V}\) and \(P\) be a probability distribution over the vertices in \(\mathbf{V}\) generated by the causal structure represented by \(G\). \(G\) and \(P\) satisfy the causal Markov condition if and only if for every \(W \in \mathbf{V}\), \(W\) is independent of \(\mathbf{V} \setminus (\textbf{Descendants}(W) \cup \textbf{Parents}(W))\) given \(\textbf{Parents}(W)\).
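For a directed acyclic graph and a distribution with a density, this condition is equivalent to the Markov factorization of the joint distribution. A sketch, writing \(\mathbf{V} = \{V_1, \ldots, V_n\}\) (the indexing is introduced here for illustration):

\[ P(V_1, \ldots, V_n) = \prod_{i=1}^{n} P(V_i \mid \textbf{Parents}(V_i)) \]

For the common cause graph \(X \leftarrow Z \rightarrow Y\) this reads \(P(X, Y, Z) = P(Z)\,P(X \mid Z)\,P(Y \mid Z)\), which is the screening-off property in Reichenbach’s principle.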

The Causal Minimality Condition

The Faithfulness Condition

Causal Model

As it relates to time series

Granger Causality

Questions

Inferring the causal model from data.

Say we observe the data from the toy examples in inference’s blog. How do you disentangle this to find the causal mechanisms? Are you able to run something through the function \(\phi\)? This seems unrealistic, especially if you just have the data…

Counterfactuals aren’t testable

If we are using a causal model to build some way of doing counterfactual reasoning, how can we measure the performance of models? In toy domains it is easy to generate the correct distributions, but my time in RL has taught me that nothing is so simple when moving beyond toy problems (e.g. distribution shifts can have major implications for the space the agent is in versus where it has been before). Acting in the world begets distributional change, which affects the original problem. Does causal inference reason about these distributional shifts? What are the knock-on effects?

Inferring which causal model is correct from data

Given the toy examples and two causal models, can we infer which model is correct given the observed data? I suspect the answer to this is no, and we need to do interventions to uncover the actual causal model. But then I’m confused by the motivation. We are motivated to learn the causal structure of a problem w/o being able to do A/B testing… If we can’t uncover the causal structure w/o intervention and A/B testing, then what is the point of causal inference?

Apparently this can be done w/ additive noise models and nonlinear causal relationships, using an extremely simple procedure described by (Hoyer et al. 2009): fit a regression in each candidate direction and check whether the residuals (the irreducible error) are statistically independent of the input. The direction in which they are independent is taken to be the causal one.
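A rough sketch of that idea on synthetic data follows. It is a simplification and not the procedure from the paper: a cubic polynomial fit stands in for the regression, and the two directions are compared by a raw, biased HSIC score instead of a proper independence test. The data-generating function, sample size, and all helper names are assumptions made for illustration.

```python
import numpy as np

def gaussian_gram(v):
    """Gaussian kernel Gram matrix with a median-heuristic bandwidth."""
    d2 = (v[:, None] - v[None, :]) ** 2
    bandwidth2 = np.median(d2[d2 > 0])
    return np.exp(-d2 / (2.0 * bandwidth2))

def hsic(a, b):
    """Biased empirical HSIC; values near zero suggest independence."""
    n = len(a)
    k, l = gaussian_gram(a), gaussian_gram(b)
    # Double-centre k, i.e. compute H k H with H = I - (1/n) 1 1^T.
    k_centered = (k - k.mean(axis=0, keepdims=True)
                    - k.mean(axis=1, keepdims=True) + k.mean())
    return float(np.sum(k_centered * l)) / n**2

def anm_score(cause, effect, degree=3):
    """Regress effect on cause, then score dependence of residual on cause."""
    coeffs = np.polyfit(cause, effect, degree)
    residual = effect - np.polyval(coeffs, cause)
    return hsic(cause, residual)

def standardize(v):
    return (v - v.mean()) / v.std()

# Synthetic ground truth (an assumption for this demo): X -> Y, additive noise.
rng = np.random.default_rng(0)
n = 500
x = rng.uniform(-2.0, 2.0, size=n)
y = x**3 + rng.normal(size=n)
xs, ys = standardize(x), standardize(y)

score_xy = anm_score(xs, ys)   # residual of Y given X vs. X
score_yx = anm_score(ys, xs)   # residual of X given Y vs. Y

direction = "X -> Y" if score_xy < score_yx else "Y -> X"
print(score_xy, score_yx, direction)
```

The lower score marks the direction in which the residuals look more independent of the input. A real application would need a calibrated independence test and a check that the regression actually fits, which bears on the model performance question below.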

Is the independence of mechanism and input a reasonable assumption for learning systems?

I’m reminded of Weapons of Math Destruction. Can we assume the underlying mechanisms for generating effects are independent of the distributions we use? I guess you can always separate out the different functions for different variables, i.e. instead of \(C \rightarrow_\psi E\) you have \((C_1, C_2, C_3, \ldots) \rightarrow_\psi E\). So in this instance we will have a multi-input causal structure.

Is the restriction to non-linear w/ additive Gaussian noise true, or is it just invertibility of the causal mechanism?

Can we distinguish between various causal graphs with unseen latent causes?

How does the causal inference in (Hoyer et al. 2009) depend on model performance?

Causal State Representations

Reading list

Causal state representations

https://arxiv.org/pdf/1906.10437.pdf

Learning Causal State Representations of Partially Observable Environments

https://arxiv.org/abs/2102.11107

Causal Effect Identifiability under Partial-Observability

http://proceedings.mlr.press/v119/lee20a.html

https://arxiv.org/abs/2106.04619

Nonlinear causal discovery with additive noise models

https://papers.nips.cc/paper/2008/file/f7664060cc52bc6f3d620bcedc94a4b6-Paper.pdf